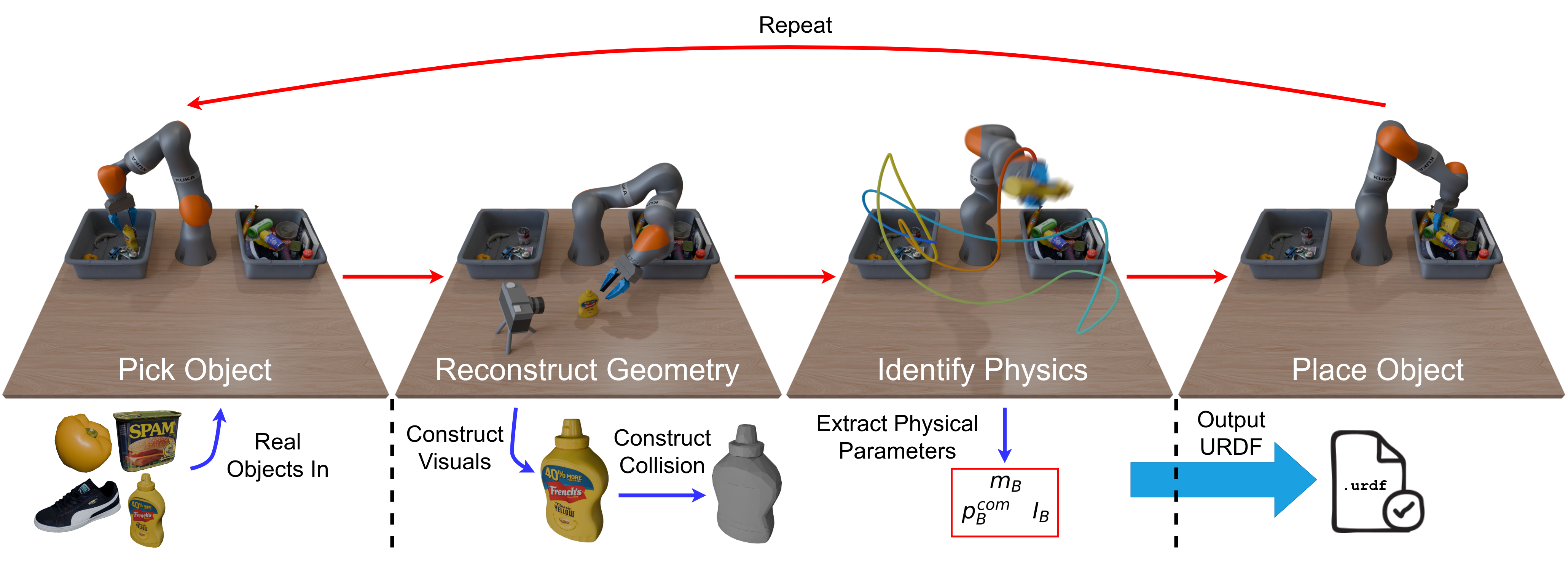

Simulating object dynamics from real-world perception enables digital twins and robotic manipulation but often demands labor-intensive measurements and expertise. We present a fully automated Real2Sim pipeline that generates simulation-ready assets for real-world objects through robotic interaction. Using only a robot’s joint torque sensors and an external camera, the pipeline identifies visual geometry, collision geometry, and physical properties such as inertial parameters. Our approach introduces a general method for extracting high-quality, object-centric meshes from photometric reconstruction techniques (e.g., NeRF, Gaussian Splatting) by employing alpha-transparent training while explicitly distinguishing foreground occlusions from background subtraction. We validate the full pipeline through extensive experiments, demonstrating its effectiveness across diverse objects. By eliminating the need for manual intervention or environment modifications, our pipeline can be integrated directly into existing pick-and-place setups, enabling scalable and efficient dataset creation.
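The abstract describes training a photometric reconstruction with alpha transparency while treating foreground occluders differently from background. Below is a minimal sketch of one per-pixel objective with that property. It assumes the renderer outputs premultiplied color and accumulated opacity, and that a hypothetical label map marks each pixel as background, object, or occluder (for example, the gripper). It illustrates the idea and is not the paper's exact loss.

```python
import numpy as np

# Hypothetical per-pixel label values for a segmentation of each training image.
BACKGROUND, OBJECT, OCCLUDER = 0, 1, 2


def alpha_transparent_loss(pred_rgb, pred_alpha, gt_rgb, labels):
    """Per-pixel RGBA loss for object-centric reconstruction (illustrative sketch).

    pred_rgb:   (H, W, 3) premultiplied color rendered by the model (no background composite)
    pred_alpha: (H, W) accumulated opacity rendered by the model
    gt_rgb:     (H, W, 3) captured camera image
    labels:     (H, W) integer map with BACKGROUND, OBJECT, or OCCLUDER per pixel
    """
    # Target: the object is opaque with its captured color; the background is
    # fully transparent (alpha = 0, premultiplied color = 0).
    target_alpha = (labels == OBJECT).astype(float)
    target_rgb = gt_rgb * target_alpha[..., None]
    per_pixel = ((pred_rgb - target_rgb) ** 2).sum(axis=-1) + (pred_alpha - target_alpha) ** 2
    # Occluder pixels (e.g., the gripper in front of the object) are dropped from
    # the loss instead of being treated as background: the object may continue
    # behind them, so forcing them transparent would carve holes into the mesh.
    valid = labels != OCCLUDER
    return float(per_pixel[valid].mean()) if valid.any() else 0.0
```

The key design choice in this sketch is the three-way split. Pixels hidden behind an occluder are unknown rather than empty, so they provide no supervision in either direction. Plain background subtraction would instead push them toward transparency.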

Objects are placed in the first bin, where the robot picks them up and reconstructs their geometries by moving them in front of a static camera while re-grasping to reduce occlusions. Next, the robot identifies the object's physical parameters by following a trajectory designed to be informative for the inertial parameters. Finally, it places the object into the second bin and repeats the process with the next object. The extracted geometric and physical parameters are combined to generate a complete, simulatable object description.
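Inertial identification usually relies on a standard fact: a rigid body's Newton-Euler equations are linear in its ten inertial parameters. These are the mass m, the first mass moment h = m·c, and the six entries of the rotational inertia I about the body-frame origin. The sketch below assumes the wrench the gripper applies to the object is already known in the gripper (body) frame. The pipeline described here measures joint torques instead, so a real implementation would first map those through the arm's own dynamics. The sketch stacks one 6×10 regressor per sample and solves by least squares. It also reports the regressor's condition number, a common proxy for how informative a trajectory is (its use here is an assumption, not the authors' stated criterion).

```python
import numpy as np


def skew(v):
    """Matrix [v]x such that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def inertia_map(v):
    """3x6 matrix L(v) with I @ v == L(v) @ [Ixx, Ixy, Ixz, Iyy, Iyz, Izz]."""
    x, y, z = v
    return np.array([[x, y, z, 0, 0, 0],
                     [0, x, 0, y, z, 0],
                     [0, 0, x, 0, y, z]], dtype=float)


def body_regressor(a, w, wd, g):
    """6x10 regressor Y with wrench = Y @ pi, where pi = [m, h(3), I(6)].

    All vectors are in the body frame: a is the linear acceleration of the
    frame origin, w / wd the angular velocity / acceleration, g gravity.
    """
    Y = np.zeros((6, 10))
    Y[:3, 0] = a - g                               # m (a - g)
    Y[:3, 1:4] = skew(wd) + skew(w) @ skew(w)      # wd x h + w x (w x h)
    Y[3:, 1:4] = -skew(a - g)                      # h x (a - g)
    Y[3:, 4:] = inertia_map(wd) + skew(w) @ inertia_map(w)  # I wd + w x (I w)
    return Y


def newton_euler_wrench(m, h, I, a, w, wd, g):
    """Forward model (force, torque about origin), used here only to make synthetic data."""
    f = m * (a - g) + np.cross(wd, h) + np.cross(w, np.cross(w, h))
    tau = I @ wd + np.cross(w, I @ w) + np.cross(h, a - g)
    return np.concatenate([f, tau])


def identify(samples, wrenches):
    """Least-squares estimate of pi from (a, w, wd, g) samples and measured wrenches."""
    Y = np.vstack([body_regressor(*s) for s in samples])
    b = np.concatenate(wrenches)
    pi, *_ = np.linalg.lstsq(Y, b, rcond=None)
    return pi, np.linalg.cond(Y)
```

The center of mass then follows as c = h / m. With noisy real measurements, a lower condition number means the estimate is less sensitive to that noise. This is why excitation trajectories are commonly optimized against it.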

Examples of interactions with our reconstructed assets in simulation and the original objects in the real world.

"Default Physics" refers to using our identified mass without specifying a center of mass or rotational inertia, so the simulator falls back to its default values for these parameters.
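The full asset and the "Default Physics" variant differ only in whether the center of mass and rotational inertia are written out. A hedged sketch of assembling an SDFormat model, a format Drake can load, makes that difference concrete. The function names, mesh file names, SDFormat version string, and the conversion of inertia to the center of mass via the parallel-axis theorem are all illustrative assumptions, not the authors' exporter.

```python
import numpy as np
import xml.etree.ElementTree as ET


def skew(v):
    """Matrix [v]x such that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def inertia_about_com(I_origin, mass, com):
    """Parallel-axis theorem: shift inertia about the body origin to the center of mass."""
    c = np.asarray(com, dtype=float)
    return np.asarray(I_origin, dtype=float) + mass * skew(c) @ skew(c)


def make_sdf(name, mass, com, inertia_com, visual_mesh, collision_mesh, default_physics=False):
    """Return an SDFormat string for one rigid object (hypothetical exporter)."""
    sdf = ET.Element("sdf", version="1.7")
    model = ET.SubElement(sdf, "model", name=name)
    link = ET.SubElement(model, "link", name=f"{name}_body")
    inertial = ET.SubElement(link, "inertial")
    if not default_physics:
        # Pose of the center of mass in the link frame (no rotation).
        ET.SubElement(inertial, "pose").text = " ".join(f"{v:.6g}" for v in com) + " 0 0 0"
    ET.SubElement(inertial, "mass").text = f"{mass:.6g}"
    if not default_physics:
        inertia = ET.SubElement(inertial, "inertia")
        entries = {"ixx": (0, 0), "ixy": (0, 1), "ixz": (0, 2),
                   "iyy": (1, 1), "iyz": (1, 2), "izz": (2, 2)}
        for tag, (i, j) in entries.items():
            ET.SubElement(inertia, tag).text = f"{inertia_com[i, j]:.6g}"
    # With default_physics=True only <mass> is written, so the simulator
    # uses its own defaults for the center of mass and rotational inertia.
    for kind, uri in (("visual", visual_mesh), ("collision", collision_mesh)):
        elem = ET.SubElement(link, kind, name=kind)
        mesh = ET.SubElement(ET.SubElement(elem, "geometry"), "mesh")
        ET.SubElement(mesh, "uri").text = uri
    return ET.tostring(sdf, encoding="unicode")
```

Separate visual and collision mesh URIs mirror the distinction between visual geometry and collision geometry that the pipeline identifies.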

Objects shown: inflator, Spam can, camera mount, Unitek box, sugar box, mouthwash.

Drake simulation recordings that demonstrate the simulatability of our assets.


A video showcasing our robot operating fully autonomously for 39 minutes! Small breaks occur when we refill the bin with new objects. The version shown is sped up 5x for a more engaging viewing experience; a full, real-time (1x) version is also available.

@article{pfaff2025_scalable_real2sim,
  author = {Pfaff, Nicholas and Fu, Evelyn and Binagia, Jeremy and Isola, Phillip and Tedrake, Russ},
  title = {Scalable Real2Sim: Physics-Aware Asset Generation Via Robotic Pick-and-Place Setups},
  year = {2025},
  eprint = {2503.00370},
  archivePrefix = {arXiv},
  primaryClass = {cs.RO},
  url = {https://arxiv.org/abs/2503.00370},
}